The Parallel Engine: Turning Theory into World Models

PixVerse-R1 is the industrial engine for the Neuro-Narrative Age. A deep dive into the 1080P real-time response engine and the move from video clips to infinite, stateful simulations.

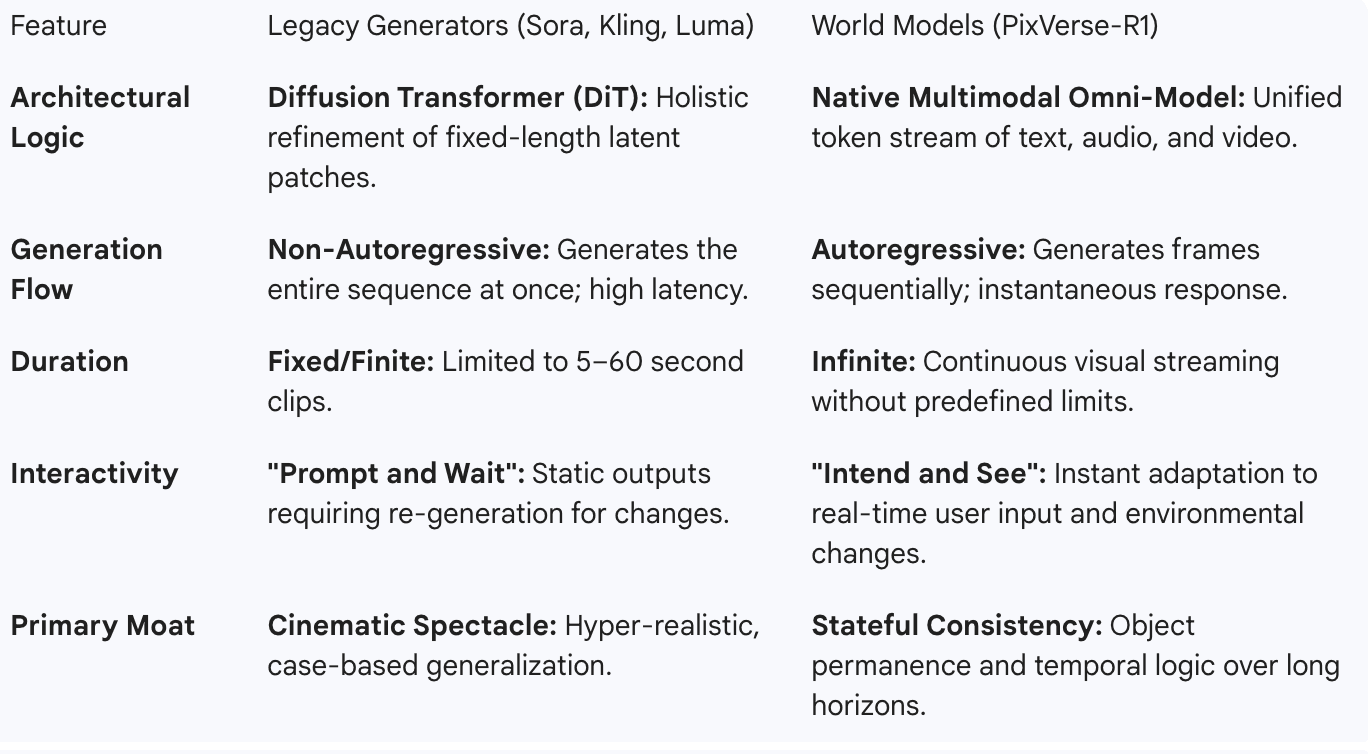

For years, AI video has been a collection of isolated artifacts. We measured success by the fidelity of a four-second render. PixVerse-R1 shatters this constraint through an Autoregressive Mechanism and Memory-Augmented Attention.

Instead of generating a static file by refining an entire sequence from noise all at once, the system predicts each subsequent frame in turn, enabling unbounded visual streaming designed to maintain physical consistency over long horizons. In industrial terms, this is the move from a "Product" to a "Process". It is the difference between a pre-rendered cutscene and a persistent world that remembers its own state, allowing characters and environments to evolve without losing their structural integrity.
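To make the "Process" idea concrete, here is a minimal sketch of an autoregressive rollout with a bounded memory that the current frame attends over. It is not PixVerse-R1's implementation. Frames are toy vectors, and `attend`, `predict_next_frame` and `stream_frames` are hypothetical names. The predictor is a placeholder for a learned model. The memory is a fixed-size window queried with standard scaled dot-product attention, which is an assumption about what "memory-augmented" means here.

```python
import math
from collections import deque
from itertools import islice
from typing import Deque, Iterator, List

Frame = List[float]  # hypothetical stand-in for one frame's latent vector


def attend(query: Frame, memory: Deque[Frame]) -> Frame:
    """Scaled dot-product attention of the current frame over remembered frames."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, m)) for m in memory]
    peak = max(scores)  # subtract the max score for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    # Weighted average of the remembered frames (normalized weights sum to 1).
    return [sum(w * m[i] for w, m in zip(weights, memory)) / total for i in range(len(query))]


def predict_next_frame(current: Frame, recalled: Frame) -> Frame:
    """Hypothetical predictor: shift the frame (toy 'motion'), then blend in recalled context."""
    moved = current[-1:] + current[:-1]
    return [0.8 * m + 0.2 * r for m, r in zip(moved, recalled)]


def stream_frames(first: Frame, memory_size: int = 8) -> Iterator[Frame]:
    """Autoregressive rollout: each new frame depends on the previous frame plus memory."""
    memory: Deque[Frame] = deque([first], maxlen=memory_size)  # oldest frames drop out
    current = first
    while True:  # unbounded stream; the consumer decides when to stop
        yield current
        current = predict_next_frame(current, attend(current, memory))
        memory.append(current)


# Consume the first 100 frames of an otherwise endless stream.
frames = list(islice(stream_frames([1.0, 0.0, 0.5]), 100))
```

The generator never finishes on its own, which is the point: length is decided by whoever consumes the stream, not by a fixed render. Because the memory window is bounded, anything older than `memory_size` frames is forgotten. That is one simple way to see why errors can still drift over very long sequences.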

To understand why this is a categorical shift, we must look at how the engine room has been rebuilt.

The "Neuro-Narrative" requires a system that can handle the complexity of human interaction across every sensory layer. Traditional AI models work in silos, but PixVerse-R1 utilizes an Omni Native Multimodal Foundation Model.

This architecture unifies text, audio, image, and video into a single continuous stream of tokens. This is the System-Facilitated core in action. Because the model is trained end-to-end across heterogeneous tasks without intermediate interfaces, it prevents the error propagation that builds up when separate models pass their outputs to one another, and it ensures robust scalability. It is an engine that internalizes the physical laws and dynamics of the real world to synthesize a consistent, responsive "parallel world" in real time.
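One common way to put several modalities into "a single continuous stream of tokens" is to give each modality its own slice of a shared vocabulary and mark where each segment begins. The sketch below illustrates that general idea only. The codebook sizes are hypothetical, and the marker scheme and the functions `to_unified_stream` and `from_unified_stream` are assumptions, not PixVerse-R1's tokenizer.

```python
from typing import Dict, List, Tuple

# Hypothetical codebook sizes per modality; the article does not publish real values.
CODEBOOK_SIZES: Dict[str, int] = {"text": 1000, "audio": 512, "image": 4096, "video": 8192}
MODALITIES = list(CODEBOOK_SIZES)  # ids 0..3 are reserved as "modality start" markers

# Give each modality a disjoint slice of one shared vocabulary, placed after the markers.
OFFSETS: Dict[str, int] = {}
_next_id = len(MODALITIES)
for _name in MODALITIES:
    OFFSETS[_name] = _next_id
    _next_id += CODEBOOK_SIZES[_name]
VOCAB_SIZE = _next_id


def to_unified_stream(segments: List[Tuple[str, List[int]]]) -> List[int]:
    """Flatten (modality, local token ids) segments into one sequence of shared ids."""
    stream: List[int] = []
    for modality, local_ids in segments:
        size = CODEBOOK_SIZES[modality]
        if any(not 0 <= t < size for t in local_ids):
            raise ValueError(f"{modality} token out of range [0, {size})")
        stream.append(MODALITIES.index(modality))  # marker token opens the segment
        stream.extend(OFFSETS[modality] + t for t in local_ids)
    return stream


def from_unified_stream(stream: List[int]) -> List[Tuple[str, List[int]]]:
    """Inverse mapping; assumes the stream starts with a marker, as produced above."""
    segments: List[Tuple[str, List[int]]] = []
    for token in stream:
        if token < len(MODALITIES):
            segments.append((MODALITIES[token], []))  # a marker starts a new segment
        else:
            modality, ids = segments[-1]
            ids.append(token - OFFSETS[modality])
    return segments


stream = to_unified_stream([("text", [5, 7]), ("audio", [3])])
```

Here `stream` is `[0, 9, 11, 1, 1007]`: one sequence a single model can read and predict, with no hand-off between separate text and audio systems.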

The most significant hurdle for B2B and luxury brands has always been latency. If an experience isn't instant, it isn't immersive. PixVerse-R1’s Instantaneous Response Engine (IRE) re-architects the sampling process to deliver high-resolution 1080P video in real time.

By implementing Temporal Trajectory Folding, the engine reduces the number of sampling steps from the traditional dozens to as few as one to four. This provides the ultra-low latency required for Interactive Cinema and AI-Native Gaming, where environments must adapt fluidly to user intent without a "loading" screen. We have officially crossed the threshold where generation and interaction are tightly coupled, creating a new medium in which visual content responds instantly to user input.
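Why step count matters comes down to simple arithmetic: each sampling step costs time, and a real-time stream has a fixed budget per frame. The sketch below does not model Temporal Trajectory Folding itself. It assumes, hypothetically, a cost of 10 ms per step and a 24 fps target, neither of which comes from the article. It also assumes latency scales linearly with steps and ignores decoding and network overhead.

```python
import math

# Hypothetical numbers for illustration only; the article gives no per-step cost or frame rate.
STEP_COST_MS = 10.0  # assumed cost of one sampling step for one frame
TARGET_FPS = 24.0    # assumed playback rate


def frame_budget_ms(fps: float = TARGET_FPS) -> float:
    """Time available to produce one frame at the given frame rate."""
    return 1000.0 / fps


def sampling_latency_ms(steps: int, step_cost_ms: float = STEP_COST_MS) -> float:
    """Assumes latency grows linearly with the number of sampling steps."""
    return steps * step_cost_ms


def fits_realtime(steps: int, fps: float = TARGET_FPS) -> bool:
    return sampling_latency_ms(steps) <= frame_budget_ms(fps)


def max_realtime_steps(fps: float = TARGET_FPS, step_cost_ms: float = STEP_COST_MS) -> int:
    """Largest step count that still fits inside one frame's budget."""
    return math.floor(frame_budget_ms(fps) / step_cost_ms)


for steps in (50, 25, 4, 1):
    print(f"{steps:>2} steps: {sampling_latency_ms(steps):6.1f} ms/frame, "
          f"real-time at {TARGET_FPS:.0f} fps: {fits_realtime(steps)}")
```

Under these assumed numbers the budget is about 41.7 ms per frame, so dozens of steps miss it by an order of magnitude while one to four steps fit. The exact crossover depends entirely on the real per-step cost.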

This technology allows our agency to deliver on the "Horizon" predictions we've set for 2027. We are moving beyond the "Explainer Video" and into Immersive Simulations:

Interactive Media: AI-native games and interactive cinematic experiences where narratives evolve dynamically.

Adaptive Training: Real-time learning and training environments that function as persistent, interactive worlds.

Persistent Branding: Luxury tech launches that function as persistent environments, reducing the distance between human intent and system response.

While our previous exploration focused on the human side of this shift (the empathy, the memory, and the "Forensic Audience"), the arrival of PixVerse-R1 is about Power: it is the computational substrate that makes Storyliving possible. That power has limits. Over extended sequences, minor prediction errors may still accumulate, potentially compromising structural integrity.

This is where Authorship remains the final moat. We are no longer just filmmakers; we are the architects of the Parallel World. The future is not waiting to render. It is happening in real time.