The Neuro-Narrative Age

From Storytelling to Storyliving. A 2,000-word deep dive into the Neuro-Narrative age, where AI-driven "Game Masters," brain-computer interfaces (BCIs), and cloud-native XR dissolve the screen into an adaptive, multisensory reality.

As the screen dissolves, we move from the transmission of data to the orchestration of adaptive reality.

Human storytelling is undergoing its most radical transformation since the invention of language itself. We are moving from the era of Storytelling, defined by the linear, broadcast transmission of static information from author to audience, to the era of Storyliving. This new paradigm is characterized by a dynamic, bi-directional, and neuro-adaptive exchange between human consciousness and responsive artificial intelligence. By 2030, immersive entertainment is expected to be not merely a sector of the media industry but a foundational interface for digital interaction, potentially expanding the market by up to 20%. This shift is driven by the convergence of three developments: Extended Reality hardware reaching retina-level resolution, Agentic AI capable of real-time narrative generation, and neuro-adaptive interfaces that shrink the latency between human intent and machine action.

The defining characteristic of the 2025–2030 epoch is the dissolution of the screen as a physical boundary. Technology is migrating from an external tool into an ambient layer of reality, facilitated by advances in spatial computing and sensory interfaces. This spatial computing revolution is not merely about better displays; it is about a fundamental re-architecting of how information is perceived and interacted with. While early VR required the user to exit physical reality to enter a digital one, the current generation of devices prioritizes high-fidelity passthrough. This capability allows digital narratives to overlay the physical world, creating hybrid realities where fictional characters can inhabit the user's actual living room.

Algorithms must now perform real-time scene understanding—identifying furniture, doors, or pets—and weave them into the narrative. For example, a horror narrative might use the user's actual hallway as the setting for a procedural scare, scaling the creature’s size to fit the physical dimensions of the room. The hardware itself is bifurcating into two distinct categories: high-fidelity Mixed Reality headsets that use cameras to reconstruct the world, and lightweight Augmented Reality glasses that project light onto the user's view of the real world. By 2030, the emergence of light field displays that project 4D images directly onto the retina is expected to eliminate the need for bulky screens entirely.
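The hallway example can be made concrete with a small sketch. It assumes the scene-understanding step has already produced room dimensions in metres; the `Box` type, the `fit_scale` function, and the 0.9 clearance margin are hypothetical names and values chosen for illustration, not part of any particular XR SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned dimensions in metres."""
    width: float
    height: float
    depth: float


def fit_scale(creature: Box, room: Box, margin: float = 0.9) -> float:
    """Return a uniform scale so the creature fits inside the room.

    `margin` leaves some clearance from walls and ceiling (assumed value).
    The result is capped at 1.0 so the creature is never enlarged.
    """
    ratios = (
        room.width / creature.width,
        room.height / creature.height,
        room.depth / creature.depth,
    )
    return min(1.0, margin * min(ratios))


# Hypothetical numbers: a narrow hallway reported by scene understanding.
hallway = Box(width=1.2, height=2.4, depth=5.0)
creature = Box(width=2.0, height=3.0, depth=2.5)
scale = fit_scale(creature, hallway)
print(f"scale factor: {scale:.2f}")  # scale factor: 0.54
```

The tightest dimension (here the hallway width) decides the scale, which is why the creature shrinks even though the hallway is long.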

To trick the brain into accepting a digital reality, that is, to achieve true presence, the interface must go beyond the eyes and ears and speak directly to the nervous system. The industry is moving from audiovisual to multisensory and eventually neural interfaces. By 2030, BCI technology is projected to transition from medical necessity to high-end consumer luxury. Passive BCI systems monitor the user's cognitive state in real time, analyzing brainwaves and heart rate to determine whether the user is stressed, focused, bored, or excited. This data feeds the neuro-adaptive story engine, allowing the narrative to automatically adjust its intensity or introduce plot twists to maintain optimal engagement.
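One way to picture the neuro-adaptive loop is as a simple feedback controller. The sketch below is illustrative only: the arousal score, its equal weighting of heart rate and an EEG band ratio, and the target and gain values are all assumptions, and a real system would need calibrated, per-user signal processing.

```python
def estimate_arousal(heart_rate_bpm: float, beta_alpha_ratio: float) -> float:
    """Toy arousal score in [0, 1] from two signals (weights are assumptions)."""
    hr_term = (heart_rate_bpm - 60.0) / 60.0  # 60 bpm -> 0, 120 bpm -> 1
    eeg_term = beta_alpha_ratio / 2.0         # a ratio of 2.0 -> 1
    score = 0.5 * hr_term + 0.5 * eeg_term
    return max(0.0, min(1.0, score))


def adjust_intensity(intensity: float, arousal: float,
                     target: float = 0.6, gain: float = 0.5) -> float:
    """Proportional step toward a target arousal level.

    Intensity rises when the user is under-stimulated and falls when
    arousal overshoots the target. The result is clamped to [0, 1].
    """
    new_value = intensity + gain * (target - arousal)
    return max(0.0, min(1.0, new_value))


# Hypothetical readings for a bored user and a stressed user.
bored = estimate_arousal(heart_rate_bpm=66, beta_alpha_ratio=0.4)
stressed = estimate_arousal(heart_rate_bpm=114, beta_alpha_ratio=1.8)

raised = adjust_intensity(0.5, bored)      # story ramps up
lowered = adjust_intensity(0.5, stressed)  # story eases off
print(round(raised, 3), round(lowered, 3))
```

A proportional controller is the simplest choice here; it is used only to show the direction of the feedback, not as a claim about how production story engines are tuned.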

Active BCI allows users to manipulate the environment through thought and intent. Thinking about moving an object triggers the action, achieved by decoding motor cortex signals that precede physical movement.

Next-generation haptics shatter the glass wall of the screen by simulating texture, weight, and temperature. Ultrasound haptics use focused sound waves to create tactile sensations in mid-air, allowing users to touch holograms without wearing gloves. Multisensory integration combines visuals and haptics with scent, wind, and vibration to trigger deep memory centers. The scent of rain or gunpowder can ground a user in a scene more effectively than high-resolution graphics.
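The active BCI idea in this passage, where decoded intent triggers an action, depends on not firing on every noisy sample. The sketch below assumes a hypothetical decoder that emits a confidence in [0, 1] for "move object" at each time step; the `IntentTrigger` class and its thresholds are illustrative, and smoothing with hysteresis is one common way to debounce such a signal, not a description of any specific BCI product.

```python
class IntentTrigger:
    """Fires once when smoothed decoder confidence crosses a threshold.

    An exponential moving average plus hysteresis means a single noisy
    sample neither triggers nor immediately re-triggers the action.
    """

    def __init__(self, on: float = 0.7, off: float = 0.4, alpha: float = 0.5):
        self.on, self.off, self.alpha = on, off, alpha
        self.smoothed = 0.0
        self.active = False

    def update(self, confidence: float) -> bool:
        """Feed one confidence value; return True only on a new trigger."""
        self.smoothed = self.alpha * confidence + (1 - self.alpha) * self.smoothed
        if not self.active and self.smoothed >= self.on:
            self.active = True
            return True
        if self.active and self.smoothed <= self.off:
            self.active = False
        return False


# Hypothetical confidence stream: one isolated spike, then sustained intent.
stream = [0.1, 0.9, 0.2, 0.8, 0.9, 0.95, 0.9, 0.3, 0.1, 0.1]
trigger = IntentTrigger()
fired_at = [i for i, c in enumerate(stream) if trigger.update(c)]
print(fired_at)  # [4]
```

The isolated spike at index 1 is ignored, and the action fires once at index 4 when the intent is sustained.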

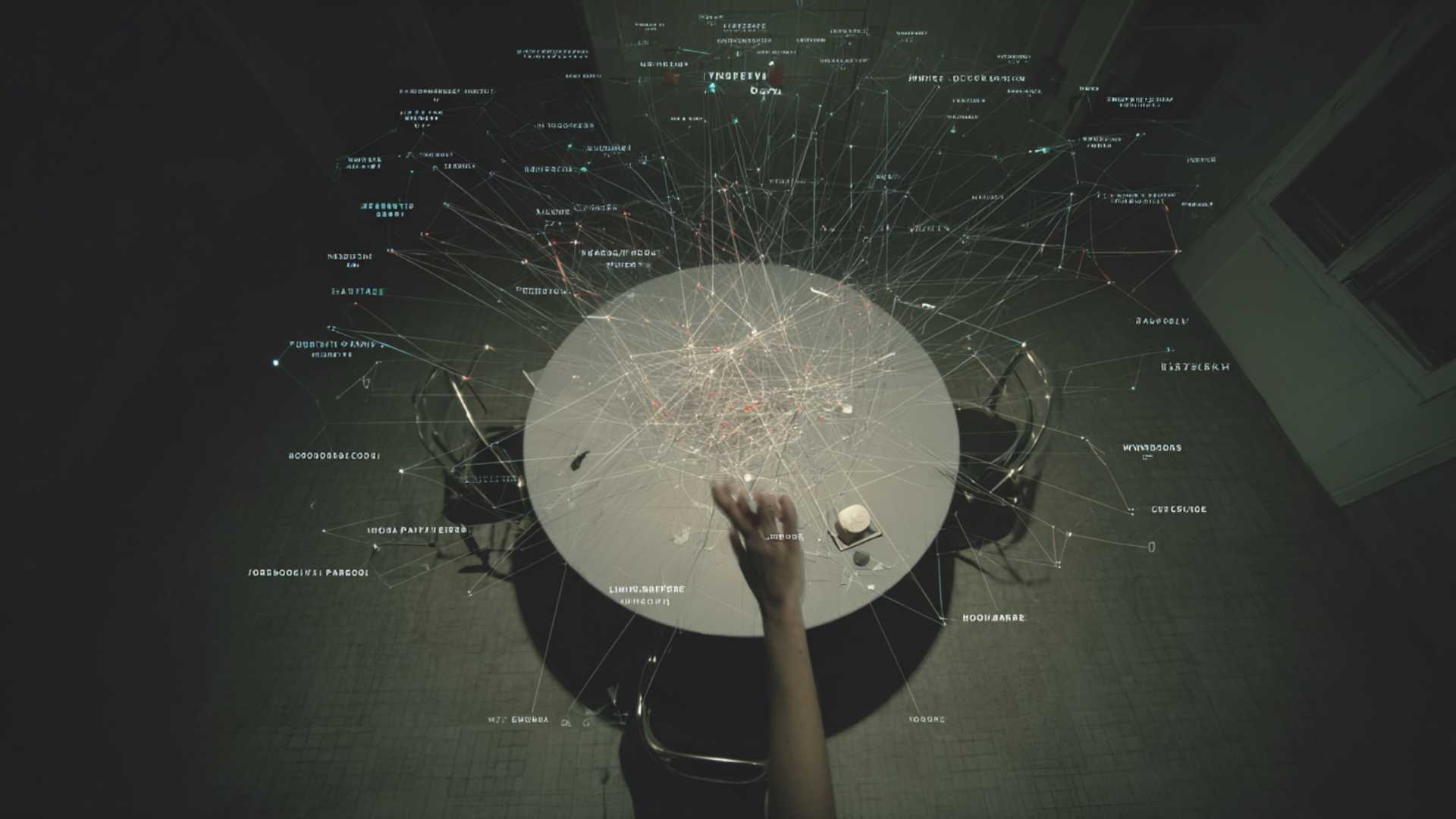

We are moving from author-led narratives that are fixed and linear toward system-facilitated experiences that are probabilistic and co-created. This transition is anchored by the Generative Game Master (GGM), an orchestration layer that coordinates various specialized sub-agents. The Narrative Agent manages the plot arc, ensuring the story hits necessary emotional beats regardless of player deviation. The World Agent simulates the physics and ecology, so that if it rains in one area, the river levels in another rise. Character Agents control individual NPCs, giving them distinct personalities and motivations that allow them to improvise dialogue in real time.
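The division of labor among the sub-agents can be sketched as a small orchestration loop. Everything here is a simplified assumption: the class names, the shared `WorldState`, the toy rain-to-river coefficient, and the fixed agent order are hypothetical, and the character agent is a stub where a real system would call a generative model.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class WorldState:
    """Shared state that every sub-agent reads and updates."""
    facts: dict[str, float] = field(default_factory=dict)
    log: list[str] = field(default_factory=list)


class Agent(Protocol):
    def step(self, state: WorldState, player_action: str) -> None: ...


class WorldAgent:
    """Propagates simple cause and effect: rain in one area raises the river."""
    def step(self, state: WorldState, player_action: str) -> None:
        rain = state.facts.get("rain_upstream_mm", 0.0)
        state.facts["river_level_m"] = 1.0 + 0.01 * rain  # toy coefficient


class NarrativeAgent:
    """Injects a plot beat if too many turns pass without one."""
    def __init__(self, max_gap: int = 3):
        self.max_gap, self.turns_since_beat = max_gap, 0

    def step(self, state: WorldState, player_action: str) -> None:
        self.turns_since_beat += 1
        if self.turns_since_beat >= self.max_gap:
            state.log.append("narrative: inject plot beat")
            self.turns_since_beat = 0


class CharacterAgent:
    """Stub NPC; a real system would generate dialogue here."""
    def __init__(self, name: str, motive: str):
        self.name, self.motive = name, motive

    def step(self, state: WorldState, player_action: str) -> None:
        state.log.append(f"{self.name} reacts to '{player_action}' ({self.motive})")


class GameMaster:
    """Orchestration layer: runs each sub-agent once per player turn."""
    def __init__(self, agents: list[Agent]):
        self.agents = agents

    def turn(self, state: WorldState, player_action: str) -> WorldState:
        for agent in self.agents:
            agent.step(state, player_action)
        return state


state = WorldState(facts={"rain_upstream_mm": 50.0})
gm = GameMaster([WorldAgent(), NarrativeAgent(max_gap=2),
                 CharacterAgent("Mara", "protect the village")])
for action in ["open gate", "cross bridge"]:
    gm.turn(state, action)
print(state.facts["river_level_m"], state.log)
```

Keeping the agents behind one `step` interface lets the orchestrator stay ignorant of what each agent does, which is the point of treating the GGM as a coordination layer rather than a single model.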

To prevent narrative breakdown, the industry is adopting Neuro-Symbolic AI. This hybrid approach allows for narratives that are creatively boundless via neural layers while remaining logically sound through symbolic rule enforcement. The symbolic component acts as a scaffold, tracking every variable from health levels to inventory, ensuring that AI-generated actions never contradict established lore or logic. This architecture allows the story to emerge from the collision of player choices, NPC goals, and world constraints rather than following a pre-written path.
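A minimal way to illustrate the neural-plus-symbolic split is a propose-then-validate loop. In this sketch the "neural" side is only a shuffled list of candidate actions standing in for a generative model, and the rules, field names, and `GameState` structure are hypothetical examples of the kind of constraints a symbolic layer might enforce.

```python
import random
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GameState:
    health: int = 10
    inventory: set[str] = field(default_factory=set)
    dead_npcs: set[str] = field(default_factory=set)


def propose_actions(rng: random.Random) -> list[dict]:
    """Stand-in for the neural layer: returns candidate story actions.

    A real system would sample these from a generative model.
    """
    candidates = [
        {"verb": "use", "item": "silver key"},
        {"verb": "talk", "npc": "innkeeper"},
        {"verb": "use", "item": "lantern"},
    ]
    rng.shuffle(candidates)
    return candidates


def is_consistent(action: dict, state: GameState) -> bool:
    """Symbolic layer: hard rules that every action must satisfy."""
    if state.health <= 0:
        return False  # a dead protagonist cannot act
    if action["verb"] == "use" and action["item"] not in state.inventory:
        return False  # cannot use an item that was never picked up
    if action["verb"] == "talk" and action["npc"] in state.dead_npcs:
        return False  # cannot talk to a character the lore says is dead
    return True


def next_action(state: GameState, rng: random.Random) -> Optional[dict]:
    """Return the first proposal that passes every rule, or None."""
    for action in propose_actions(rng):
        if is_consistent(action, state):
            return action
    return None


state = GameState(inventory={"lantern"}, dead_npcs={"innkeeper"})
chosen = next_action(state, random.Random(0))
print(chosen)  # {'verb': 'use', 'item': 'lantern'}
```

Whatever order the proposals arrive in, only the action that respects inventory and lore survives, which is the scaffold role the symbolic component plays.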

The role of the audience is shifting from voyeur to co-creator. In the generative age, audiences expect to influence the media they consume, evolving into proactive prosumers who participate in the production of the content. This engagement creates deeper emotional investment, as users who feel they have built part of the world are significantly more loyal. However, infinite agency can lead to decision fatigue, where the cognitive load becomes overwhelming. Successful narratives in 2030 will likely use soft agency, where the AI anticipates what the user wants to do and facilitates it.

Immersive storytelling also acts as a cognitive calibrator. VR-induced empathy literalizes the act of putting a user in another’s shoes through embodied cognition. The multisensory nature of future XR hijacks the brain’s hippocampal processes, creating memories of fiction that are encoded almost as deeply as real-world experiences. This blurs the distinction between what was watched and what was lived, leading to stronger retention but also raising questions about the malleability of memory.

As narratives become hyper-personalized to an individual’s cognitive state, society faces the risk of a fragmented shared reality. In an era of AI-generated, neuro-adaptive narratives, no two people will experience the same story. If the AI tailors the ending of a movie to fit specific biases or preferences, we lose the common cultural ground necessary for social discourse. We risk retreating into niche realities where worldviews are constantly reinforced and never challenged.

The integration of BCI introduces the ultimate privacy dilemma, extending surveillance from what we do to who we are. Neuro-profiling data becomes the most valuable commodity on earth, as story engines learn exactly what scares, excites, or motivates a user based on their brainwaves. Malicious actors could theoretically hack a BCI-enabled narrative to extract sensitive information or even subliminally manipulate a user's opinions. The concept of mental integrity as a human right will likely be a major legal battleground by 2030.

The future:

Monetization is shifting from "ticket sales" to recurring ecosystems.

Experience Passes: These offer access to virtual concerts, persistent game worlds, and social hubs, expected to grow at a 24.76% CAGR.

In-Experience Economy: Engagement drives micro-transactions where users buy agency—unlocking narrative paths, AI companions, or personalized world-states.

Advertising 3.0: Static banner ads are replaced by Native Immersive Advertising, where brands create interactive 3D objects or characters within the story.
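To give the 24.76% CAGR figure for Experience Passes some intuition, the sketch below compounds it over five years. The five-year horizon is an assumption made here for illustration (roughly 2025 to 2030); the source figure does not state the period it applies to.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor implied by compounding `cagr` for `years` years."""
    return (1.0 + cagr) ** years


# Assumed horizon of five years; the rate is the figure quoted above.
multiple = growth_multiple(0.2476, 5)
print(f"{multiple:.2f}x")  # 3.02x
```

Under that assumption, the segment would roughly triple over the period.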

The transition to 2030 is not merely a hardware upgrade; it is an ontological shift. We are building a neuro-narrative ecosystem where the boundary between the dreamer and the dream is erased. For creators, the mandate is clear: abandon the script and design the system. The art of the future lies in crafting the rules, the constraints, and the emotional intelligence of the AI agents that will co-create the story with the audience. The Adaptive Reality is coming, and the question is no longer if it will happen, but who will write the code that governs it.

Neuro-Narrative Convergence (2025–2030): Research Essay on Future Potential.

Hosie, A. (2025): Perceived Value Is the Only Value That Matters

Bain & Company (2025): The New Era of Immersive Entertainment

Solarflare Studio (2025): April 2025 – Emerging Tech Report

Telefónica (2025): XR: Expectations for 2025

World Economic Forum (2024): Brain-Computer Interface Market Risk Report

arXiv (2025): Multi-Actor Generative AI as a Game Engine

Ericsson (2030): 10 Hot Consumer Trends – The Internet of Senses